Enterprise AI Agents: Balancing Productivity and Data Governance

The promise of AI agents in the enterprise is compelling: autonomous systems that can handle complex workflows, make intelligent decisions, and dramatically accelerate business processes. Yet as organizations race to deploy these powerful tools, they're discovering a fundamental tension between maximizing productivity gains and maintaining robust data governance. Getting this balance right isn't just a technical challenge—it's a strategic imperative that will define which organizations thrive in the AI era.

Vector Databases vs Native Format: Choosing the Right Approach for AI-Ready Data

As organizations race to integrate AI capabilities into their operations, a critical question emerges: should you transform your company data into vector embeddings and store it in a specialized vector database, or keep it in its native format? This decision can significantly impact your AI implementation's performance, cost, and maintainability.

Data Management in System Integration: Best Practices for Success

In today's interconnected business landscape, companies rarely rely on a single software system. From CRM platforms to accounting software, from inventory management to marketing automation tools, modern organizations juggle multiple systems that need to work together seamlessly. This is where system integration becomes critical—and where data management can make or break your success.

HHS Interoperability: Navigating the Complex Landscape of Standards, Funding, and Future Directions

The promise of seamless data exchange across health and human services systems has long been a goal for government agencies. Yet despite decades of investment and policy initiatives, achieving true interoperability between case management, eligibility, and related systems remains elusive. As we move through 2025, the landscape is shifting rapidly with new standards, enforcement priorities, and funding mechanisms emerging alongside persistent challenges.

From Business Data to AI Features: Practical Feature Engineering for Enterprise Systems

Your organization has spent years—maybe decades—collecting data. Customer records, transaction histories, service requests, inventory movements, financial ledgers. The data is there, structured in tables, normalized for operational efficiency, and powering your day-to-day business.

Now you want to use that data for AI and machine learning. Maybe you're building predictive models, recommendation engines, or automated decision systems. But there's a problem: the data that runs your business isn't automatically ready to train AI models.

This is where feature engineering comes in—the process of transforming raw business data into meaningful inputs (features) that machine learning algorithms can actually learn from. And for many organizations, this step is both the most critical and the most overlooked part of an AI initiative.
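Here is a minimal illustration of that transformation. The sketch turns raw transaction rows into one feature record per customer, covering recency, frequency, and spend. The row layout, the `build_customer_features` helper, and the sample values are hypothetical. A real pipeline would read from your operational tables and choose features to suit the specific model.

```python
from collections import defaultdict
from datetime import date

# Hypothetical transaction rows: (customer_id, transaction_date, amount)
transactions = [
    ("C001", date(2024, 1, 5), 120.00),
    ("C001", date(2024, 3, 18), 80.50),
    ("C002", date(2024, 2, 11), 300.00),
]

def build_customer_features(rows, as_of):
    """Aggregate raw transaction rows into one feature record per customer."""
    grouped = defaultdict(list)
    for customer_id, txn_date, amount in rows:
        grouped[customer_id].append((txn_date, amount))

    features = {}
    for customer_id, txns in grouped.items():
        last_date = max(d for d, _ in txns)
        total = sum(a for _, a in txns)
        features[customer_id] = {
            "days_since_last_purchase": (as_of - last_date).days,  # recency
            "purchase_count": len(txns),                            # frequency
            "total_spend": round(total, 2),                         # monetary value
            "avg_order_value": round(total / len(txns), 2),
        }
    return features

features = build_customer_features(transactions, as_of=date(2024, 4, 1))
print(features["C001"])
```

The normalized rows stay as they are in the operational system. The model sees one row per customer, with columns it can actually learn from.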

The Importance of Data Profiling in Data Migration Projects

When organizations move data from one system to another (whether upgrading legacy systems, merging platforms after an acquisition, or adopting a modern cloud solution), data migration becomes a mission-critical task. Yet one of the most overlooked aspects of a successful migration is data profiling. Skipping this step often leads to surprises during testing or go-live, when it’s too late (and too costly) to fix underlying data quality issues.
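A first profiling pass can be as simple as counting blanks, distinct values, and the most frequent values in each column. The sketch below assumes the legacy extract has been loaded as a list of dictionaries. `profile_table`, `profile_column`, and the sample rows are illustrative and not part of any particular profiling tool.

```python
from collections import Counter

def profile_column(values):
    """Summarize one column: completeness, cardinality, and most frequent values."""
    non_null = [v for v in values if v not in (None, "")]
    return {
        "rows": len(values),
        "null_or_blank": len(values) - len(non_null),
        "distinct": len(set(non_null)),
        "top_values": Counter(non_null).most_common(3),
    }

def profile_table(rows):
    """Profile every column that appears in any row; absent fields count as null."""
    columns = {key for row in rows for key in row}
    return {col: profile_column([row.get(col) for row in rows]) for col in sorted(columns)}

# Hypothetical legacy extract
legacy_customers = [
    {"id": "1", "state": "CA", "phone": "555-0100"},
    {"id": "2", "state": "ca", "phone": ""},
    {"id": "3", "state": "CA", "phone": None},
    {"id": "4", "state": "NY"},
]

profile = profile_table(legacy_customers)
for column, stats in profile.items():
    print(column, stats)
```

Even on four rows, the output shows that `phone` is mostly empty and that `state` mixes `CA` and `ca`. Issues like these are far cheaper to catch before mapping begins.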

Data Quality and Data Governance: Building Trust in Your Data

Becoming a truly data-driven organization requires more than great analytics tools — it requires trust. That trust comes from two interdependent pillars: data quality and data governance.

At datrixa, we’ve seen firsthand that one cannot succeed without the other. Strong governance creates the structure for data quality to thrive, while quality ensures governance policies actually deliver value.

The Hidden Risk in Data Migration: Duplicate Sources and How to Handle Them

When organizations migrate data from legacy systems to new platforms, the spotlight often falls on mapping fields, transforming values, and ensuring completeness. But one critical challenge lurks in the background: duplicate sources of data. Overlooking duplicates can inflate storage costs, break downstream integrations, and undermine user trust in the new system. That’s why identifying, de-duplicating, and testing for duplicates should be a core part of every migration strategy.
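The sketch below walks through identifying, de-duplicating, and testing. It assumes two hypothetical sources whose records can be matched on a normalized email address. It applies a simple survivorship rule: the source listed first wins. Real migrations often need fuzzy matching and richer survivorship rules. The function names and data are illustrative only.

```python
def normalize_key(record):
    """Match key: email with surrounding whitespace removed and case folded (an assumed rule)."""
    return record["email"].strip().lower()

def deduplicate(*sources):
    """Merge (source_name, records) pairs, keeping the first record seen for each match key."""
    survivors = {}
    duplicates = []
    for source_name, records in sources:
        for record in records:
            key = normalize_key(record)
            if key in survivors:
                duplicates.append((source_name, record))  # set aside for review, not loaded
            else:
                survivors[key] = {**record, "source": source_name}
    return list(survivors.values()), duplicates

crm = [
    {"email": "Ana@Example.com", "name": "Ana Ruiz"},
    {"email": "bo@example.com", "name": "Bo Chen"},
]
billing = [
    {"email": " ana@example.com", "name": "A. Ruiz"},
    {"email": "cy@example.com", "name": "Cy Park"},
]

merged, dupes = deduplicate(("crm", crm), ("billing", billing))

# Post-load test: every surviving record must have a unique match key.
assert len({normalize_key(r) for r in merged}) == len(merged)
print(len(merged), "records loaded;", len(dupes), "duplicate(s) set aside")
```

Keeping the duplicates list, rather than silently dropping those records, gives business owners something concrete to review before cutover.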

The Impact of Effective Data Governance on Data Migration Projects

Data migration projects are often among the most complex undertakings within an organization. Whether moving to a new ERP, adopting cloud platforms, or consolidating legacy systems, these initiatives carry high stakes: downtime, data loss, regulatory exposure, and business disruption are all on the line. Too often, organizations underestimate the importance of data governance—only to discover that poor oversight leads to delays, unexpected costs, and compromised outcomes.

Effective data governance doesn’t just “support” data migration—it enables it. Let’s explore why.

Navigating Data Governance & Compliance During System Integration

When organizations undertake large system integration projects, much of the focus naturally falls on technical execution—connecting systems, synchronizing data, and ensuring uptime. Yet one of the most critical (and often underestimated) aspects is data governance and compliance. Without proper attention, integration projects can expose sensitive data, create regulatory risks, and undermine trust in the very systems they are meant to improve.

In this post, we’ll explore why governance matters during integration, the key compliance challenges to consider, and best practices to keep your projects on solid ground.

Why Cleaning and Preparing Your Data is Essential for AI Success

Artificial intelligence (AI) promises smarter decisions, greater efficiency, and the ability to uncover insights hidden in your data. But there’s one truth that can’t be ignored: AI is only as good as the data it’s fed.

If the data going into your AI systems is messy, incomplete, or inconsistent, the results will reflect those flaws. That’s why preparing and cleaning your data isn’t just a technical step—it’s a strategic priority.

Training and Supporting Users After a Data Migration

Addressing expectations, retraining on new structures, and providing change support

Data migration is one of the most critical phases of any digital transformation project. While IT teams focus on moving and validating data, the long-term success of the migration depends heavily on how well users adapt to the new system. Without proper training and support, even the most technically flawless migration can lead to confusion, frustration, and resistance among end-users.

Here’s how organizations can ensure smooth adoption by addressing expectations, retraining on new structures, and providing change support.

Creating a Collaborative Environment for Successful System Integrations

System integrations are rarely just about technology—they’re about people, processes, and communication. When multiple systems, stakeholders, and business units need to work together, the technical challenges are only half the battle. The real success of an integration project lies in fostering a collaborative environment where everyone involved can contribute, adapt, and stay aligned.

In today’s fast-paced digital landscape, where organizations are modernizing legacy systems and stitching together new platforms, collaboration is no longer optional—it’s a requirement for delivering value quickly and effectively.

Communicating Data Migration Status to Executives

Data migration projects are high-stakes initiatives. They impact business continuity, decision-making, and often come with tight timelines. While technical teams live in the details—schemas, ETL jobs, error logs—executives need a different kind of visibility. Communicating effectively with them can mean the difference between a perceived success and a project in trouble.

Designing a Practical Data Migration Architecture: Key Technical Considerations

When it comes to data migration, the temptation is to design the “perfect” architecture with the best tools money can buy. But in reality, migrations are short-term, high-intensity projects — you might only need the architecture for weeks or months. Overbuilding can mean wasting budget on tools that will gather dust once cutover is done.

A smart migration architecture is fit-for-purpose — it reuses what you already have, matches the project’s lifespan, and considers how the target environment will operate after migration.

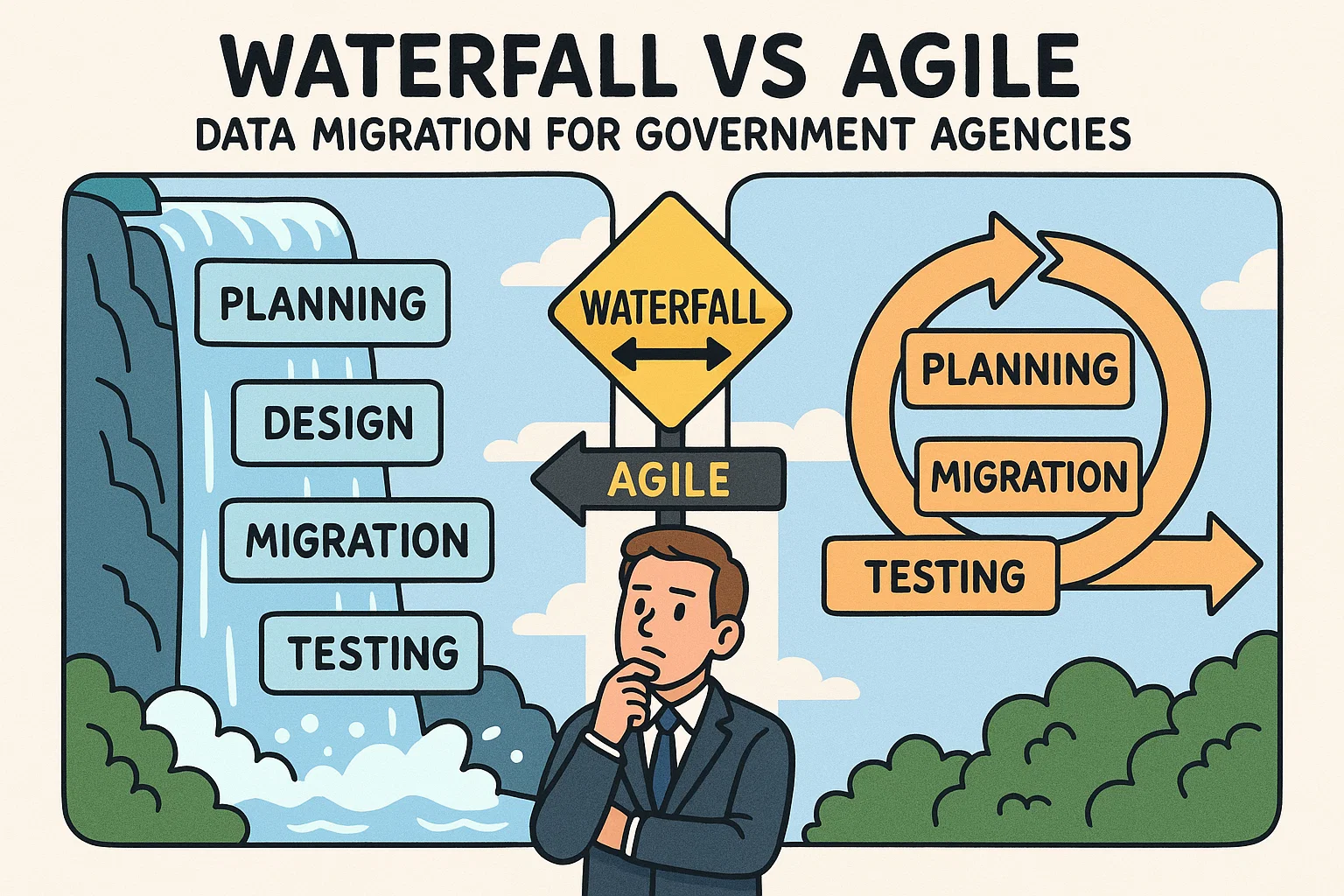

Agile vs Waterfall in Government Data Migration: What Works Best?

Government agencies are under increasing pressure to modernize legacy systems, comply with evolving regulations, and provide better digital services. At the heart of many of these initiatives is data migration—a technically complex and often high-risk effort that requires careful planning and execution.

One of the first decisions in any data migration project is choosing the right project management approach. Should you use a structured, phased Waterfall methodology? Or adopt a more flexible, iterative Agile model? For many government projects, the answer may be a blend of both.

Let’s explore the pros and cons of Waterfall and Agile in the context of public sector data migration—and how hybrid models can offer the best of both worlds.

Who Should Be on Your Data Migration Team?

Last week we described how important it is to involve the business, not just IT, in your data migration project. This week we dig a little deeper and discuss the specific team roles you should fill to make your project successful.

When planning a data migration, choosing the right technology and tools is only part of the equation. The real success factor? Assembling the right team.

Data migration projects are complex, high-risk initiatives that touch nearly every part of a business. Done right, they set your organization up for streamlined operations, better insights, and future growth. Done wrong, they can result in lost data, business disruptions, and damaged reputations. That's why you need more than just technical expertise—you need a multidisciplinary team with clear roles, responsibilities, and a shared understanding of the mission.

Data Migration Projects Must Involve the Business

Data migration is often viewed as a technical task—something for the IT department to handle quietly in the background. But treating data migration solely as an IT problem is one of the fastest ways to turn a project into a costly, delayed, and high-risk endeavor.

Whether moving to a new system, consolidating platforms after a merger, or modernizing legacy infrastructure, data migration must be recognized for what it truly is: a business-critical transformation. And that means the business must be actively involved.

Best Practice: Use a Data Migration Sandbox

When modernizing a legacy system, data migration is more than just a technical task — it’s a full-fledged program with deep dependencies on both legacy and target systems. Yet many teams overlook one of the most important resources for success:

A dedicated data migration sandbox for the new system.

While development environments are common, a migration-specific sandbox is often missing — and that’s a mistake. A data migration sandbox gives your team the space to test data loads, validate transformations, and work through the complexity of legacy-to-modern mappings — all without disrupting active development or risking production stability.

In this post, we’ll explain why having a dedicated migration sandbox is a best practice, what it should look like, and how to keep it aligned with your evolving target system.
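One basic check to run in a migration sandbox is source-to-target reconciliation. It compares record counts, finds keys that went missing or appeared unexpectedly, and compares a control total. The sketch below assumes both sides fit in memory as lists of dictionaries. `reconcile`, the `acct` key, and the `balance` field are hypothetical names.

```python
def reconcile(source_rows, target_rows, key, amount_field):
    """Compare a legacy extract with the rows that landed in the sandbox target."""
    source_keys = {r[key] for r in source_rows}
    target_keys = {r[key] for r in target_rows}
    source_total = sum(r[amount_field] for r in source_rows)
    target_total = sum(r[amount_field] for r in target_rows)
    return {
        "source_count": len(source_rows),
        "target_count": len(target_rows),
        "missing_in_target": sorted(source_keys - target_keys),
        "unexpected_in_target": sorted(target_keys - source_keys),
        "amount_delta": round(source_total - target_total, 2),  # control-total difference
    }

legacy = [
    {"acct": "A1", "balance": 100.0},
    {"acct": "A2", "balance": 50.25},
    {"acct": "A3", "balance": 10.0},
]
sandbox = [
    {"acct": "A1", "balance": 100.0},
    {"acct": "A2", "balance": 50.25},
]

print(reconcile(legacy, sandbox, key="acct", amount_field="balance"))
```

Because the sandbox is isolated, the team can rerun a load, check like this, and fix the mapping as often as needed without touching development or production.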

Best Practice: Use a Copy of Production

One of the most common — and dangerous — shortcuts in legacy system modernization and data migration projects is working directly on the live production database.

Whether you’re profiling data, writing migration scripts, testing transformation logic, or validating mappings, using production data directly can introduce massive risk to your operations, compliance, and project timelines.

Best practice is simple but critical:

Always use a sanitized, secure, and up-to-date copy of the production database — never the production environment itself.

In this post, we explore the why behind this rule, the risks of violating it, and how to work safely and effectively with production data in a modern project.
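Sanitizing a copy usually includes masking sensitive fields. The sketch below shows one common option, deterministic pseudonymization with a keyed hash (HMAC-SHA256). The same input always maps to the same token, so joins across tables still work. The column list, the key value, and the helper names are assumptions for illustration. In practice the key would live in a secrets manager. Masking is also only one part of a secure copy, alongside access controls and a regular refresh process.

```python
import hashlib
import hmac

# Hypothetical key; in practice, load it from a secrets manager, never from source code.
MASKING_KEY = b"replace-with-a-secret-from-your-vault"

# Assumed list of sensitive columns; derive yours from a data classification exercise.
SENSITIVE_COLUMNS = {"ssn", "email"}

def pseudonymize(value, key=MASKING_KEY):
    """Replace a value with a stable token so joins across tables still line up."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return "tok_" + digest[:16]

def sanitize_row(row):
    """Return a copy of the row with sensitive, non-null columns masked and others unchanged."""
    return {
        col: pseudonymize(str(val)) if col in SENSITIVE_COLUMNS and val is not None else val
        for col, val in row.items()
    }

print(sanitize_row({"id": 7, "ssn": "123-45-6789", "email": "ana@example.com"}))
```

A keyed hash is used instead of a plain hash because a plain hash of a small value space, such as Social Security numbers, can be reversed by brute force. Without the key, the tokens cannot be recomputed.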